This post documents a telemetry demo that uses pipeline + InfluxDB + Grafana to build a visual monitoring setup.

- pipeline: collects telemetry data from the device.

- InfluxDB: stores the collected telemetry data in a database.

- Grafana: visualizes the data stored in the database.

## Installation and Configuration

### Pipeline

This demo uses "bigmuddy-network-telemetry-pipeline". The project has been archived, so I forked it; you can get it with the following command:

```shell
git clone https://github.com/xuxing3/bigmuddy-network-telemetry-pipeline.git
```

The configuration file is shown below. The command strips comment and blank lines; the `#` notes in the listing are explanations:

```
[root@xuxing239 bigmuddy-network-telemetry-pipeline]# grep -v ^# pipeline-influxdb.conf | grep -v ^$
```

```ini
[default]
id = pipeline
metamonitoring_prometheus_resource = /metrics
metamonitoring_prometheus_server = :8989

[testbed]
stage = xport_input
type = tcp
encap = st
# Listen on port 5432 (can be changed)
listen = :5432

[inspector]
stage = xport_output
type = tap
# Where the collected telemetry data is written
file = /opt/dump-xuxing.txt
datachanneldepth = 1000

[metrics_influx]
stage = xport_output
type = metrics
# Collected telemetry is formatted according to this file and written to InfluxDB
file = metrics-nms.json
# A copy of the data is dumped to this file before the formatted output is sent
dump = metrics-dump-xuxing.txt
output = influx
# InfluxDB address; the default port is 8086
influx = http://10.70.80.197:8086
# InfluxDB database used to store the telemetry data
database = mdt_db
```
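Each section declares a `stage`: `testbed` is the `xport_input` (the TCP listener the router dials into), while `inspector` and `metrics_influx` are `xport_output` stages (a dump file and InfluxDB). As a rough sanity check, the sketch below parses an excerpt of the file with Python's `configparser` and reports the stages and the listen port. It assumes the file is plain INI that `configparser` can read; pipeline itself uses its own Go parser, and `summarize_stages` / `listen_port` are hypothetical helpers, not part of pipeline.

```python
import configparser

# Excerpt of pipeline-influxdb.conf from above (only the sections this check uses).
CONF_TEXT = """
[testbed]
stage = xport_input
type = tcp
encap = st
listen = :5432

[metrics_influx]
stage = xport_output
type = metrics
file = metrics-nms.json
output = influx
influx = http://10.70.80.197:8086
database = mdt_db
"""


def _parse(text):
    # Only '=' is a delimiter, so values such as ':5432' or 'http://...:8086' stay intact.
    parser = configparser.ConfigParser(delimiters=("=",), interpolation=None)
    parser.read_string(text)
    return parser


def summarize_stages(text):
    """Return {section: (stage, type)} for every section that declares a stage."""
    parser = _parse(text)
    return {
        name: (parser[name]["stage"], parser[name].get("type", ""))
        for name in parser.sections()
        if "stage" in parser[name]
    }


def listen_port(text, section="testbed"):
    """Extract the TCP port from a 'listen = :PORT' entry."""
    return int(_parse(text)[section]["listen"].rsplit(":", 1)[1])


if __name__ == "__main__":
    for name, (stage, kind) in summarize_stages(CONF_TEXT).items():
        print(f"{name}: {stage} ({kind})")
    # The router's destination port must equal this value.
    print("listen port:", listen_port(CONF_TEXT))
```

The port printed here should match the `port` in the router's `destination-group`.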

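The content of metrics-nms.json is shown below. So far, the only way I have found to write this JSON file is to look at the data pipeline actually collects and edit the JSON to match it. The metrics file tells pipeline how to translate the collected data before importing it into the database.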

```
[root@xuxing bigmuddy-network-telemetry-pipeline]# cat metrics-nms.json
```

```json
[
  {
    "basepath" : "Cisco-IOS-XR-asr9k-np-oper:hardware-module-np/nodes/node/nps/np/counters",
    "spec" : {
      "fields" : [
        {"name":"node-name", "tag": true},
        {"name":"np-name"},
        {
          "name":"np-counter",
          "fields" : [
            {"name":"counter-name", "tag": true},
            {"name":"counter-value"},
            {"name":"rate"},
            {"name":"counter-type"},
            {"name":"counter-index"}
          ]
        }
      ]
    }
  }
]
```

Setting `"tag": true` on a field (here `node-name` and `counter-name`) makes it a tag, so the data can later be filtered by that field.
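To illustrate what the spec does, here is a simplified model of how a spec like this could turn one decoded telemetry record into InfluxDB line protocol: tag fields become tags, other leaves become fields, and the nested `np-counter` list fans out into one point per counter. This is an approximation written for this post, not pipeline's actual Go code; using `basepath` as the measurement name, the sample record, and all helper names are assumptions.

```python
import json

# The metrics-nms.json spec from above.
SPEC = json.loads("""
[{"basepath": "Cisco-IOS-XR-asr9k-np-oper:hardware-module-np/nodes/node/nps/np/counters",
  "spec": {"fields": [
    {"name": "node-name", "tag": true},
    {"name": "np-name"},
    {"name": "np-counter", "fields": [
      {"name": "counter-name", "tag": true},
      {"name": "counter-value"},
      {"name": "rate"},
      {"name": "counter-type"},
      {"name": "counter-index"}]}]}}]
""")

# Hypothetical decoded record; the values are made up for illustration.
SAMPLE = {
    "node-name": "0/0/CPU0",
    "np-name": "NP0",
    "np-counter": [
        {"counter-name": "CNT_A", "counter-value": 10, "rate": 2,
         "counter-type": "drop", "counter-index": 1},
    ],
}


def flatten(fields, record, tags, values, points):
    """Walk spec fields; a nested 'fields' list produces one point per row."""
    tags, values, nested = dict(tags), dict(values), []
    for f in fields:
        if f["name"] not in record:
            continue
        if "fields" in f:
            nested.append(f)
        elif f.get("tag"):
            tags[f["name"]] = str(record[f["name"]])
        else:
            values[f["name"]] = record[f["name"]]
    if not nested:
        points.append((tags, values))
    for f in nested:
        rows = record[f["name"]]
        for row in rows if isinstance(rows, list) else [rows]:
            flatten(f["fields"], row, tags, values, points)
    return points


def esc_measurement(s):
    # Line protocol: commas and spaces must be escaped in measurement names.
    return s.replace(",", r"\,").replace(" ", r"\ ")


def esc_tag(s):
    # Tag keys, tag values and field keys also escape '='.
    return s.replace(",", r"\,").replace("=", r"\=").replace(" ", r"\ ")


def fmt_value(v):
    if isinstance(v, bool):
        return "true" if v else "false"
    if isinstance(v, int):
        return f"{v}i"  # integer field
    if isinstance(v, float):
        return repr(v)
    s = str(v).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{s}"'  # string field


def to_line_protocol(measurement, tags, values, ts_ns):
    tag_part = "".join(f",{esc_tag(k)}={esc_tag(v)}" for k, v in sorted(tags.items()))
    field_part = ",".join(f"{esc_tag(k)}={fmt_value(v)}" for k, v in sorted(values.items()))
    return f"{esc_measurement(measurement)}{tag_part} {field_part} {ts_ns}"


def lines_for_record(entry, record, ts_ns):
    points = flatten(entry["spec"]["fields"], record, {}, {}, [])
    # A point without any field is not valid line protocol, so skip it.
    return [to_line_protocol(entry["basepath"], t, v, ts_ns) for t, v in points if v]


if __name__ == "__main__":
    for line in lines_for_record(SPEC[0], SAMPLE, 0):
        print(line)
```

Because `node-name` and `counter-name` end up as tags, Grafana can group or filter by them (for example `WHERE "counter-name" = 'CNT_A'`), whereas `np-name` is stored as an ordinary string field.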

### InfluxDB/Grafana

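```shell
# Grafana 5.4.3, web UI on port 3000
docker run -d -v grafana-storage:/var/lib/grafana -p 3000:3000 grafana/grafana:5.4.3
# InfluxDB: admin UI (container port 8083) on host port 8080, HTTP API on 8086
docker run -d --volume=/opt/influxdb:/data -p 8080:8083 -p 8086:8086 tutum/influxdb
```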

Both are run with Docker here. Once the containers are up, open http://

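- Create the `mdt_db` database and set how long its data is retained:

```sql
CREATE DATABASE "mdt_db"
CREATE RETENTION POLICY "24h" ON "mdt_db" DURATION 1d REPLICATION 1 DEFAULT
CREATE RETENTION POLICY "one_month" ON "mdt_db" DURATION 30d REPLICATION 1 DEFAULT
```

The `24h` policy keeps data for 1 day and `one_month` keeps it for 30 days. Both are declared `DEFAULT` and a database has only one default policy, so create the one that fits your disk; if you run both, the one created last becomes the default.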
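The same statements can also be sent without the InfluxDB CLI. The sketch below builds requests for the `POST /query` endpoint, assuming the container exposes an InfluxDB 1.x-compatible HTTP API on port 8086 and that authentication (the `admin` user pipeline logs in with) is passed as HTTP Basic auth. `build_query_request` and `send` are hypothetical helper names, and the password is a placeholder you supply.

```python
import base64
import json
import urllib.parse
import urllib.request

INFLUX_URL = "http://10.70.80.197:8086"  # InfluxDB address from the pipeline config

STATEMENTS = [
    'CREATE DATABASE "mdt_db"',
    'CREATE RETENTION POLICY "one_month" ON "mdt_db" DURATION 30d REPLICATION 1 DEFAULT',
]


def build_query_request(base_url, statement, user=None, password=None):
    """Build a POST /query request carrying one InfluxQL statement as form data."""
    body = urllib.parse.urlencode({"q": statement}).encode()
    headers = {"Content-Type": "application/x-www-form-urlencoded"}
    if user is not None:
        token = base64.b64encode(f"{user}:{password or ''}".encode()).decode()
        headers["Authorization"] = f"Basic {token}"
    return urllib.request.Request(
        base_url.rstrip("/") + "/query", data=body, method="POST", headers=headers
    )


def send(req, timeout=10):
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode())
```

Usage: for each statement in `STATEMENTS`, call `send(build_query_request(INFLUX_URL, stmt, "admin", password))`; a successful statement returns a result without an `"error"` key.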

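Other commonly used commands:

```sql
SHOW MEASUREMENTS
SELECT * FROM ""
SHOW RETENTION POLICIES ON "mdt_db"
```

In the `influx` CLI, run `USE mdt_db` first so that the queries target this database, and put a measurement name returned by `SHOW MEASUREMENTS` between the quotes of the `SELECT`.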
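Over HTTP, the same queries go to `GET /query` with a `db` parameter, and the answer comes back as JSON. The sketch below assumes the InfluxDB 1.x response layout (`results` → `series` → `columns`/`values`); the helper names are hypothetical, and the sample response in the usage is made up rather than taken from this setup.

```python
import json
import urllib.parse


def show_measurements_url(base_url, db="mdt_db"):
    """GET URL that runs SHOW MEASUREMENTS against one database."""
    query = urllib.parse.urlencode({"db": db, "q": "SHOW MEASUREMENTS"})
    return f"{base_url.rstrip('/')}/query?{query}"


def measurement_names(response_text):
    """Extract measurement names from a /query JSON response."""
    names = []
    for result in json.loads(response_text).get("results", []):
        if "error" in result:
            raise RuntimeError(result["error"])
        # An empty database returns a result with no "series" key.
        for series in result.get("series", []):
            col = series["columns"].index("name")
            names.extend(row[col] for row in series.get("values", []))
    return names
```

For example, a response of `{"results":[{"statement_id":0,"series":[{"name":"measurements","columns":["name"],"values":[["m1"]]}]}]}` yields `["m1"]`; each returned name can then go between the quotes in `SELECT * FROM ""`.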

For more InfluxDB commands, see the following article:

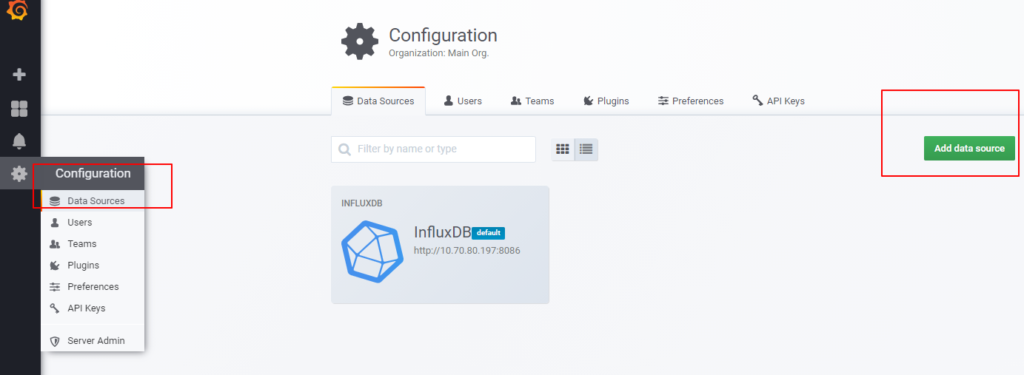

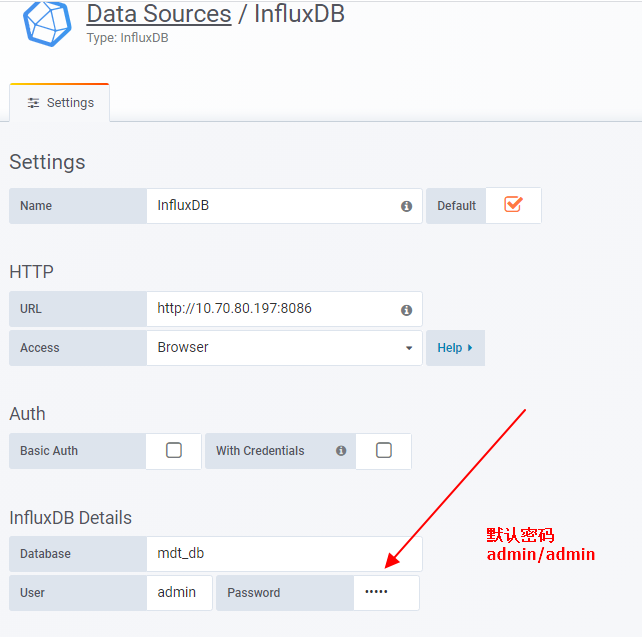

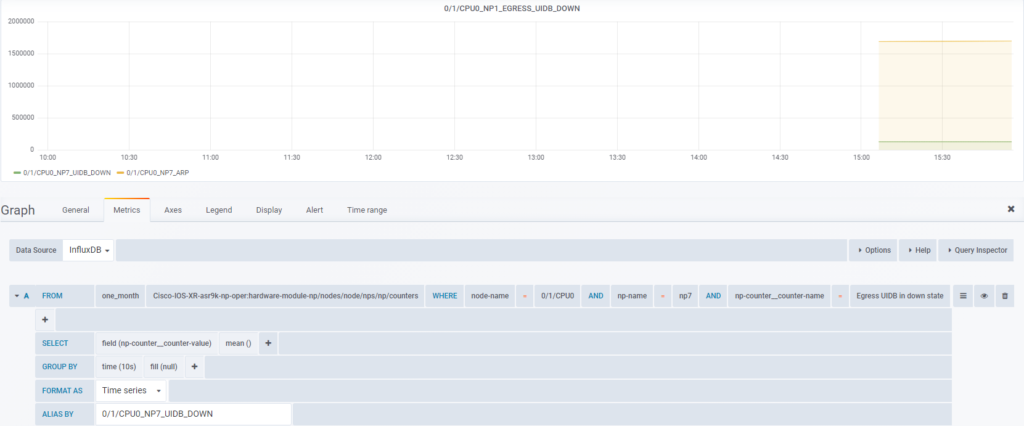

- Connect Grafana to the database
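Besides the web UI, a data source can be added through Grafana's HTTP API (`POST /api/datasources`). This is a sketch under several assumptions: Grafana is reachable on `localhost:3000` from the `docker run` above, the Grafana login is still the default `admin`/`admin`, and the `user`/`password`/`database` top-level fields are accepted as in Grafana 5.x (newer releases move the password into `secureJsonData`). The helper names are hypothetical.

```python
import base64
import json
import urllib.request

GRAFANA_URL = "http://localhost:3000"    # assumption: Grafana container on this host
INFLUX_URL = "http://10.70.80.197:8086"  # InfluxDB address from the pipeline config


def datasource_payload(name, influx_url, database, user, password):
    """JSON body describing an InfluxDB data source that Grafana queries server-side."""
    return {
        "name": name,
        "type": "influxdb",
        "access": "proxy",  # Grafana's backend talks to InfluxDB, not the browser
        "url": influx_url,
        "database": database,
        "user": user,
        "password": password,
    }


def build_datasource_request(grafana_url, payload, grafana_user, grafana_password):
    """Build the POST /api/datasources request, authenticated with HTTP Basic auth."""
    token = base64.b64encode(f"{grafana_user}:{grafana_password}".encode()).decode()
    return urllib.request.Request(
        grafana_url.rstrip("/") + "/api/datasources",
        data=json.dumps(payload).encode(),
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
    )
```

Pass the request to `urllib.request.urlopen` to create the data source; the `database` value must be `mdt_db` so that Grafana reads what pipeline writes.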

## Telemetry Data Collection

### IOS XR (IOX) Device Configuration

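```
RP/0/RSP1/CPU0:ASR9906-B#show run telemetry model-driven
Wed May 19 07:52:54.048 UTC
telemetry model-driven
 destination-group xuxing
  vrf calo-mgmt
  address-family ipv4 10.70.80.197 port 5432
   encoding self-describing-gpb
   protocol tcp
  !
 sensor-group xuxing
  sensor-path Cisco-IOS-XR-asr9k-np-oper:hardware-module-np/nodes/node/nps/np/counters/np-counter
 !
 subscription xuxing
  sensor-group-id xuxing strict-timer
  sensor-group-id xuxing sample-interval 5000
  destination-id xuxing
  source-interface MgmtEth0/RSP1/CPU0/0
```

The `address-family ipv4 10.70.80.197 port 5432` line points the device at the pipeline server; the address and port must match that server and the `listen = :5432` setting in the `[testbed]` section. `sample-interval 5000` is in milliseconds, so the NP counters are pushed every 5 seconds.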
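Because the interval is in milliseconds, the data volume grows quickly. The back-of-the-envelope sketch below counts samples and counter rows per day; the node, NP, and counter counts are hypothetical inputs, not numbers measured on this ASR9906.

```python
MS_PER_DAY = 24 * 60 * 60 * 1000


def samples_per_day(sample_interval_ms):
    """How many times per day the sensor path is sampled."""
    return MS_PER_DAY // sample_interval_ms


def rows_per_day(sample_interval_ms, nodes, nps_per_node, counters_per_np):
    """Counter rows per day, assuming every sample reports every counter of every NP."""
    return samples_per_day(sample_interval_ms) * nodes * nps_per_node * counters_per_np


if __name__ == "__main__":
    print(samples_per_day(5000))  # 17280
    # Hypothetical sizing: 2 line cards, 4 NPs each, 100 counters per NP.
    print(rows_per_day(5000, 2, 4, 100))
```

At 5000 ms each counter produces 17,280 samples a day, which is why both the retention policy and the dump-file cleanup below matter.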

### Server Configuration

Start pipeline with this configuration file. Because the `metrics_influx` section writes to InfluxDB, pipeline prompts for the InfluxDB username and password at startup:

```
[root@xuxing bigmuddy-network-telemetry-pipeline]# ./bin/pipeline -log= -debug -config=pipeline-influxdb.conf
INFO[2021-05-19 00:58:24.900661] Conductor says hello, loading config config=pipeline-influxdb.conf debug=true fluentd= logfile= maxthreads=12 tag=pipeline version="v1.0.0(bigmuddy)"
DEBU[2021-05-19 00:58:24.901561] Conductor processing section... name=conductor section=inspector tag=pipeline
DEBU[2021-05-19 00:58:24.901576] Conductor processing section, type... name=conductor section=inspector tag=pipeline type=tap
INFO[2021-05-19 00:58:24.901588] Conductor starting up section name=conductor section=inspector stage="xport_output" tag=pipeline
DEBU[2021-05-19 00:58:24.901619] Conductor processing section... name=conductor section="metrics_influx" tag=pipeline
DEBU[2021-05-19 00:58:24.901655] Conductor processing section, type... name=conductor section="metrics_influx" tag=pipeline type=metrics
INFO[2021-05-19 00:58:24.901665] Conductor starting up section name=conductor section="metrics_influx" stage="xport_output" tag=pipeline
INFO[2021-05-19 00:58:24.901676] Metamonitoring: serving pipeline metrics to prometheus name=default resource="/metrics" server=":8989" tag=pipeline
INFO[2021-05-19 00:58:24.901767] Starting up tap countonly=false filename="/opt/dump-xuxing.txt" name=inspector streamSpec=&{2 <nil>} tag=pipeline
CRYPT Client [metrics_influx],[http://10.70.80.197:8086]
Enter username: admin
Enter password:
INFO[2021-05-19 00:58:28.195240] setup authentication authenticator="http://10.70.80.197:8086" name="metrics_influx" pem= tag=pipeline username=admin
INFO[2021-05-19 00:58:28.195357] setup metrics collection basepath="Cisco-IOS-XR-asr9k-np-oper:hardware-module-np/nodes/node/nps/np/counters" name="metrics_influx" tag=pipeline
DEBU[2021-05-19 00:58:28.195371] metrics export configured file=metrics-nms.json metricSpec={[{Cisco-IOS-XR-asr9k-np-oper:hardware-module-np/nodes/node/nps/np/counters 0xc420344e60}] map[Cisco-IOS-XR-asr9k-np-oper:hardware-module-np/nodes/node/nps/np/counters:0xc420344e60] 0xc420328bd0} name="metrics_influx" output=influx tag=pipeline
DEBU[2021-05-19 00:58:28.195429] Conductor processing section... name=conductor section=testbed tag=pipeline
DEBU[2021-05-19 00:58:28.195452] Conductor processing section, type... name=conductor section=testbed tag=pipeline type=tcp
INFO[2021-05-19 00:58:28.195466] Conductor starting up section name=conductor section=testbed stage="xport_input" tag=pipeline
```
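The three "Conductor starting up section" lines confirm that `inspector`, `metrics_influx`, and `testbed` all came up. If the log is saved to a file, a quick check like the sketch below can flag a section that failed to start. The regular expression is written against the log format shown above and may need adjusting for other pipeline builds; `started_sections` and `missing_sections` are hypothetical helpers.

```python
import re

# Matches e.g.: Conductor starting up section name=conductor section="metrics_influx" stage="xport_output"
SECTION_START = re.compile(
    r'Conductor starting up section\b.*?\bsection="?([^"\s]+)"?\s+stage="?([^"\s]+)"?'
)


def started_sections(log_text):
    """Return {section: stage} for every section pipeline reports as started."""
    started = {}
    for line in log_text.splitlines():
        m = SECTION_START.search(line)
        if m:
            started[m.group(1)] = m.group(2)
    return started


def missing_sections(log_text, expected):
    """Sections from the config that never logged a start-up line."""
    started = started_sections(log_text)
    return [name for name in expected if name not in started]
```

With the log above, `missing_sections(log, ["testbed", "inspector", "metrics_influx"])` returns an empty list.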

### Basic Grafana Configuration

## Other Optimizations

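Telemetry produces a large amount of data, so it is a good idea to clear the dump files on the server periodically; otherwise they can easily fill the disk.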

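```
[root@xuxing bigmuddy-network-telemetry-pipeline]# crontab -l
0 * * * * truncate -s 0 /opt/dump-xuxing.txt
0 * * * * truncate -s 0 /root/bigmuddy-network-telemetry-pipeline/metrics-dump-xuxing.txt_wkid0
[root@xuxing bigmuddy-network-telemetry-pipeline]#
```

Entries in a user crontab (edited with `crontab -e` and listed with `crontab -l`) have no user column; a `root` field is only valid in `/etc/crontab` or `/etc/cron.d/`, and in a user crontab it would be executed as part of the command. `0 * * * *` runs at the top of every hour, which is what `*/60` in the minute field amounted to, and `truncate -s 0` empties each file completely.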
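If clearing the files every hour is too aggressive, a size-based variant can be run from cron instead: it empties a dump file only when it exceeds a threshold. This is a minimal sketch; the 500 MB threshold is a hypothetical value, and the file list simply reuses the two paths from the crontab above.

```python
import os

DUMP_FILES = [
    "/opt/dump-xuxing.txt",
    "/root/bigmuddy-network-telemetry-pipeline/metrics-dump-xuxing.txt_wkid0",
]
MAX_BYTES = 500 * 1024 * 1024  # hypothetical threshold: 500 MB


def truncate_if_larger(path, max_bytes):
    """Empty `path` if it exists and is larger than max_bytes; return True if truncated."""
    try:
        size = os.path.getsize(path)
    except FileNotFoundError:
        return False
    if size <= max_bytes:
        return False
    os.truncate(path, 0)
    return True


if __name__ == "__main__":
    for p in DUMP_FILES:
        if truncate_if_larger(p, MAX_BYTES):
            print("truncated", p)
```

Scheduled with a user-crontab line such as `*/10 * * * * /usr/bin/python3 /path/to/script.py` (path hypothetical), the files are checked every ten minutes but only emptied once they become large.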